November 26, 2025

The hidden AI layer between your brand and your buyers

Every marketer I speak to is talking about how they use AI.

Very few are talking about the AI they cannot see.

While we are all busy playing with tools and prompts, LinkedIn, Meta, TikTok, YouTube, Reddit, X, Snapchat and Pinterest are quietly rebuilding their products around AI. Not as a feature, as the fabric.

That AI now sits between your brand and your buyers.

It decides whose feed you appear in, which post or person gets recommended, how your content is summarised, and whether your beautifully shot video is watched in full, skipped, or used as training data for the next model.

This newsletter is not about how you use AI.

It is about how the platforms are using AI on you, and what that means for the way you plan, create and measure social. (Oh, and there is an AI-generated video at the end, which is rather good and worth a listen if you prefer that to reading.)

The new gatekeeper: recommendation engines on steroids

Let’s start with the invisible bit.

Every social platform now runs on AI-first recommendation systems. They are doing a frankly bonkers amount of heavy lifting for you, whether you like it or not. They are multi-modal (they read text, images, video, audio and on-screen text), graph-based (they understand networks of people and entities) and real-time (they adjust to behaviour on the fly).

Under the bonnet, they are chewing on a few big categories of data:

- behavioural signals – clicks, likes, comments, saves, shares, dwell time, watch time, search queries

- social graph – who you are connected to, who you work with, who you follow, what groups and communities you sit in

- content inventory – posts from connections, pages, groups, creators, trending topics

- context – time of day, device, language, rough location, recent activity

- profile data – job title, skills, industry, seniority, interests, education, and how complete your profile is

Every time a buyer touches social, they are feeding these systems.

On LinkedIn, that graph is very deep. Jobs, companies, skills, endorsements, recommendations, who views what, who applies where. All stitched together to decide which posts, people and brands look relevant.

On Meta, cross-app signals flow between Facebook, Instagram and Threads. Watch a set of Reels, save a carousel, tap through Stories, and you will see the impact in your Facebook feed and ad recommendations later.

TikTok and YouTube lean hard into video and audio understanding. They are parsing speech, text overlays, objects in frame and even editing style to guess what a clip is really about and who is likely to care.

For marketers, the important bit is this, and it is a bit of a gut punch:

You are training these models every day, whether you choose to or not.

If your content creates clear, consistent behavioural signals with the right people, the AI has an easier time learning who should see you. If your content is vague, inconsistent, or aimed at “everyone”, you are effectively throwing noisy data at the algorithm.
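To make "noisy data" a bit more concrete, here is a toy Python sketch. It is not how any platform actually works; the function name `audience_focus` and the segment labels are invented for illustration. It simply asks: of everyone who engaged with a post, what share came from the people you actually want to reach?

```python
from collections import Counter

def audience_focus(engagers, target_segments):
    """Return the share (0.0 to 1.0) of engagement events from target segments.

    `engagers` is a list with one segment label per engagement event.
    Both the labels and the idea of a single "focus" number are assumptions
    made for this sketch, not a platform metric.
    """
    if not engagers:
        return 0.0
    counts = Counter(engagers)
    on_target = sum(n for segment, n in counts.items() if segment in target_segments)
    return on_target / len(engagers)

# Hypothetical posts: one aimed squarely at CMOs, one aimed at "everyone".
focused_post = ["cmo"] * 8 + ["student"] * 2
vague_post = ["cmo"] * 2 + ["student"] * 5 + ["recruiter"] * 3

focused_share = audience_focus(focused_post, {"cmo"})
vague_share = audience_focus(vague_post, {"cmo"})
```

Both posts earned ten engagements, but the first sends a much cleaner message about who the content is for. That difference is the "clear versus noisy" signal in miniature.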

Agents in the feed (not the 007 type though)

The next big shift is agents. Little AI sidekicks quietly wedged between you and your buyer, doing a chunk of the thinking before a human even gets a look in.

Platforms are starting to give users AI assistants that sit inside the experience. That changes how people search, learn and decide.

On LinkedIn, the AI stack has grown from simple prompts to full workflows.

- Hiring Assistant acts like an AI recruiter. It can understand a role, search semantically for candidates, shortlist, and draft outreach.

- AI-assisted search lets you forget Boolean strings and filters. You can type “B2B buyers at UK banks” and get a curated list.

- AI messaging helps recruiters and sales teams tailor outreach, increasing acceptance and reply rates.

- Job seeker tools and skill simulations act as a coach, suggesting learning paths and practising workplace scenarios.

- Underneath it all, LinkedIn’s professional graph is now plugged into Microsoft 365 Copilot, and in Europe they have started training models on EU user data for generative products.

Meta is trialling Project Luna, a personalised morning briefing that chews through your Facebook feed and external sources, then gives you a tailored update. If Meta designs it well, this becomes the place a lot of people start their day.

Reddit Answers takes a user’s question and summarises the best bits of relevant threads. YouTube is testing conversational tools that let you ask questions about the video you are watching and get an instant answer.

X is wiring its Grok assistant directly into live platform data, so users can query trends and conversations in near real time.

In all these cases, the pattern is the same.

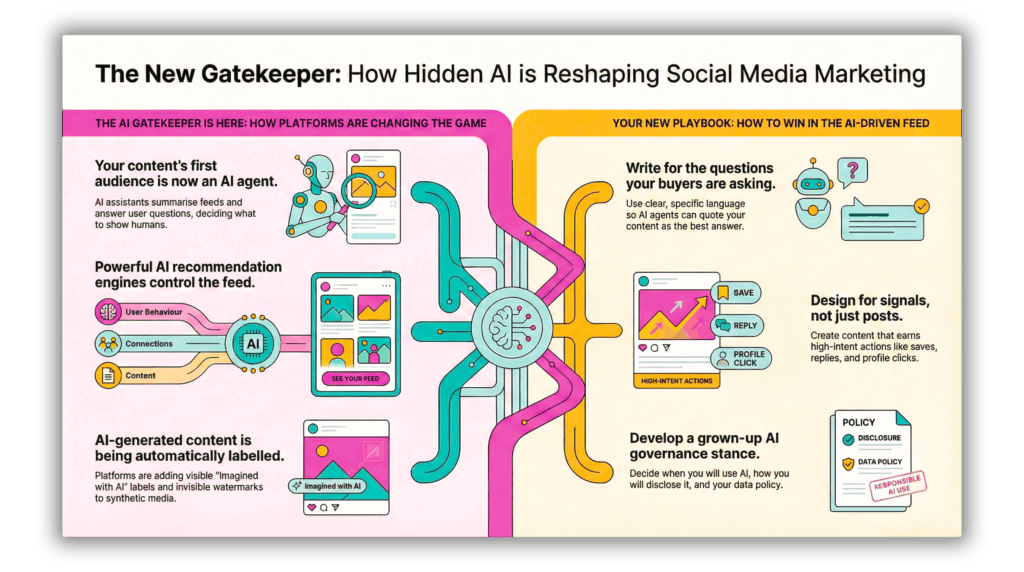

The first “audience” for your content is no longer the human scrolling the feed.

It is the AI agent deciding what to show, what to quote, and which examples to surface when the human asks a question.

That means your job is shifting from “how do we get this post in front of people” to “what questions do we want to be the answer to, and what does our content need to look like so the AI chooses us”.

Your AI content is wearing a badge

Synthetic media is also getting its own visible and invisible markers.

TikTok has gone hard on this. They are using C2PA Content Credentials to detect AI-generated content and automatically label it. They are also rolling out invisible watermarks for AI-made content, so it can be detected even after editing or re-upload.

On top of that, TikTok now lets users choose how much AI-generated content they want in the For You feed. You cannot switch it off completely, but you can turn the dial down.

Meta is labelling photorealistic AI images as “Imagined with AI” and adding AI information labels to ads that use its generative tools. The direction of travel is towards detecting and labelling AI video and audio as the tech matures.

Instagram is blending AI editing tools for Reels with clearer transparency tags. Pinterest has its own “AI modified” labels and a tuner that lets people reduce AI content in certain categories.

You can feel the pattern. The genie is out of the bottle and it is not going back in, however much we might occasionally wish it would sod off for a bit.

AI content will carry a badge.

Users will have at least some control over how much of it they see.

For brands, that forces some choices.

You need to know when you are happy to carry that AI label, and when it is a risk. Playful creative, concept visuals, speculative ideas, synthetic environments? Fine. Executive thought leadership, customer stories, crisis responses? Probably not.

It also means your AI and disclosure policies cannot stay in a drawer. Your audience will see the “AI” badges. Your legal and PR teams will too.

Data, consent and the slightly awkward questions

All this AI power is fuelled by data.

The platforms are pushing harder into what they remember about people, and regulators are starting to take an interest.

LinkedIn’s move to train generative AI products on European user data triggered a very public privacy debate. It is a signal of where the line might be, and how quickly regulators will react when platforms cross it.

Meta is rolling out “memory” for its Meta AI assistant. The idea is that it remembers what you like, what you ask, and what you do across Facebook, Instagram and WhatsApp, in order to give better recommendations.

Snapchat’s My Selfie feature lets the app create an AI version of your face. In some cases, Snap reserves the right to use that AI likeness in personalised ads shown only to you.

From a pure product and ad-tech point of view, all of this makes sense.

From a brand and trust point of view, it gets spicy. This is the sort of thing that blows up into a “what the hell were we thinking” moment if you do not get ahead of it.

CMOs now need a clear view on where they are comfortable playing.

- Are you OK with ads appearing alongside highly personalised AI experiences that use someone’s likeness?

- Do you have a position on how your own data and assets can be used in training?

- Have you thought about how you explain this to customers if you are ever asked?

These are governance questions as much as media questions.

Yes, there is a performance upside

So far this sounds a bit gloomy. It really is not. This is not a “run for the hills” moment, it is a “get your shit together” moment.

Handled well, AI-shaped platforms can make your social work harder and smarter.

On personalisation, better recommendation and delivery typically nudges conversion up. Studies across media and ecommerce consistently show uplifts in the 5-20% range when AI-driven targeting is working properly, especially at scale.
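It is worth being clear on what a 5-20% uplift means in practice, because it is a relative lift on your existing conversion rate, not extra percentage points. A quick worked sketch in Python, where the 10,000 visits and the 2% baseline are made-up numbers purely for illustration:

```python
def conversions_with_uplift(visits, baseline_rate, relative_uplift):
    """Expected conversions after applying a relative uplift to a baseline rate.

    Rounded to the nearest whole conversion. All inputs used below are
    hypothetical; only the 5-20% range comes from the text above.
    """
    return round(visits * baseline_rate * (1 + relative_uplift))

visits = 10_000          # hypothetical monthly visits from social
baseline_rate = 0.02     # hypothetical 2% conversion rate

baseline = conversions_with_uplift(visits, baseline_rate, 0.0)    # 200
low_end = conversions_with_uplift(visits, baseline_rate, 0.05)    # 210
high_end = conversions_with_uplift(visits, baseline_rate, 0.20)   # 240
```

So on those assumed numbers, the range is the difference between 10 and 40 extra conversions a month. Useful, but not magic, which is rather the point.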

On efficiency, AI-assisted creative and optimisation can take big chunks out of your production and testing cycle. LinkedIn’s AI messaging, Instagram’s auto-editing and YouTube’s AI tools for titles and thumbnails are all designed to reduce time from idea to live.

On measurement, we are seeing more granular attribution inside platform analytics. AI-driven models are better at connecting the dots between exposures, saves, clicks and conversions.

The upside is real.

The risk is when teams treat that upside as an excuse to automate absolutely everything, and then wonder why all their content feels beige, samey and a bit crap.

The win comes when you use AI to take the grind out of production and optimisation, and reinvest that time into better ideas, sharper points of view, clearer offers and bolder creative.

What CMOs actually need to change

If you strip away the hype, a few practical shifts stand out.

First, your content and profiles need to be answer-friendly.

Write for the questions your buyers and their AI sidekicks are asking.

That means:

- clear, specific language on profiles and pages

- canonical answers to common, high-intent questions that AI can quote

- posts that actually resolve a problem or tension, not just tease

Second, you need to design for signals, not just posts.

Pick the behaviours that really matter to your pipeline on each platform. Saves, sends, replies, profile clicks, branded search, community joins. Then design content that earns those signals, consistently, from the right people.
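One way to make "design for signals" tangible is a simple scorecard. The sketch below is an assumption-heavy illustration in Python, not a platform formula: the weights, the signal names and the `on_target` flag are all hypothetical, and you would set them from your own pipeline data.

```python
# Hypothetical weights: how much each behaviour matters to *your* pipeline.
SIGNAL_WEIGHTS = {
    "reply": 5,
    "send": 4,
    "save": 3,
    "profile_click": 2,
    "like": 1,
}

def signal_score(events, weights=SIGNAL_WEIGHTS):
    """Sum weighted signals, counting only events from the target audience.

    Each event is a dict with a "signal" name and an "on_target" boolean.
    Signals without a weight (for example a plain view) score zero.
    """
    return sum(
        weights.get(event["signal"], 0)
        for event in events
        if event["on_target"]
    )

# A made-up post: two useful signals, one off-target like, one unweighted view.
post_events = [
    {"signal": "save", "on_target": True},
    {"signal": "like", "on_target": False},
    {"signal": "reply", "on_target": True},
    {"signal": "view", "on_target": True},
]

score = signal_score(post_events)  # 3 (save) + 5 (reply) = 8
```

The design choice that matters is the filter, not the weights. A like from someone who will never buy scores nothing here, which keeps the team focused on earning the right behaviour from the right people.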

Third, you need a grown-up AI governance stance.

Decide:

- when you will and will not use AI in content

- how you will disclose AI involvement

- what your position is on synthetic people, voices and likeness

- how you’ll respond if an AI label or data issue becomes a public conversation

Fourth, you probably need to tweak your team shape.

You still need the creatives, storytellers and social natives. But AI is now part of the team.

You also need people who:

- understand how recommendation systems behave

- can monitor and interpret platform AI updates

- can translate that into simple guardrails and playbooks for the rest of the business

A small, sharp team that combines creative, data and strategy around an AI-aware playbook will absolutely wipe the floor with a big, generic content factory every single time.

None of this requires a giant transformation programme.

It does require an honest look at where AI already touches your social, and a plan that keeps you in the driving seat while the platforms race ahead.

If you want a sober, non-hype view of how the hidden AI layer is already shaping your 2026 pipeline, that is the work we are doing with brands every week.

Come and have a proper chat about it.