November 5, 2025

Be relevant, spend smart and go synthetic on social

Relevance is everything in social. If the post doesn’t feel made for them, it’s gone in a flick. Knowing your audience is the difference between polite impressions and commercial impact.

Synthetic personas let us speak to the audience before the audience sees a thing. You build interviewable models from your first‑party signals and social listening. Then pressure-test the hook, the visual and the message. You can ask for objections, cultural tripwires and likely comments.

It’s relevance at speed without burning budget.

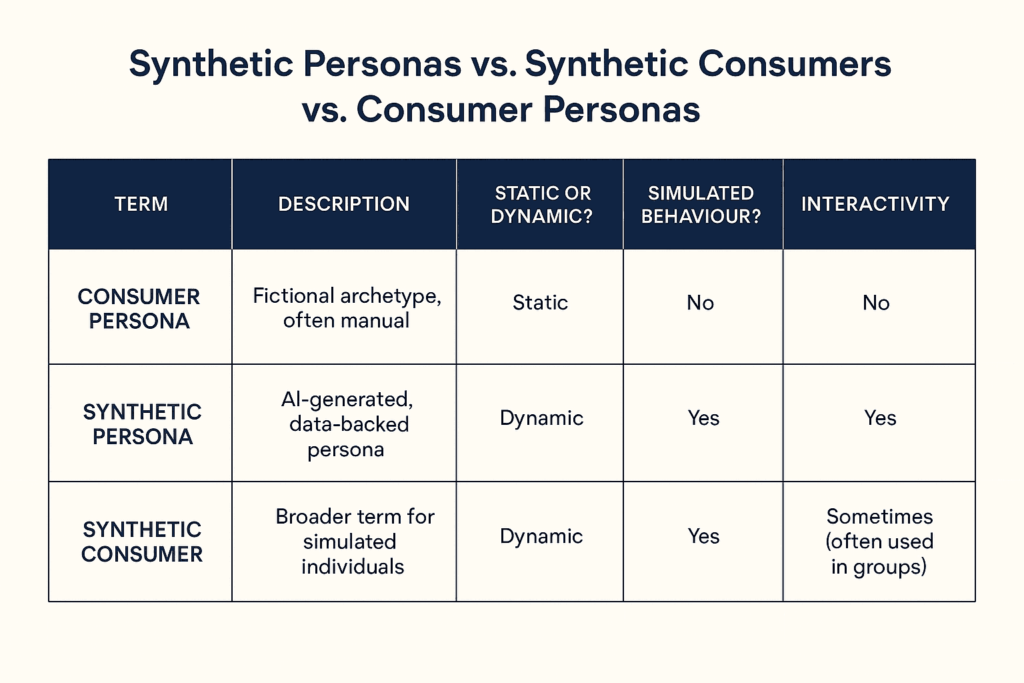

What we mean by “synthetic personas”

These are interviewable, AI‑generated stand‑ins for your real audience segments. You build them from your own data (CRM, web analytics, surveys), bought‑in research such as GWI, and social listening tools such as Brandwatch, so they reflect what people actually say and do.

Think of them as a safe rehearsal version of your customers. You can brief them like customers, run scenarios, and ask why a hook lands or a slogan jars. For social, they flag platform fit, tune language for saves and sends, surface likely objections and cultural tripwires, and rank the posts worth backing with budget.

They aren’t static slides; they’re working models that answer back. See definitions and how‑tos from Nielsen Norman Group, Delve AI, and Stravito.

Why marketers need to care now

- Speed and cost matter most. You need answers this week, not next quarter. Bain reports that when teams layer synthetic on top of human research, tests run in half the time at roughly one third of the cost. That means more ideas tested before media or production spend. (Bain)

- You do not have to trust gut feel. Stanford/DeepMind show that interview‑seeded agents match human survey answers at about 85% “as‑good‑as‑self” accuracy, meaning the agent reproduces a person’s answers about 85% as well as that person reproduces their own answers when asked again later. Use that as a first pass, then sense‑check the winners with real people. (Stanford HAI explainer / policy brief)

- Market momentum beyond the headlines. 71% of researchers expect synthetic responses to make up the majority of data collection within three years, and 69% say they used synthetics in the past year.

Ipsos reports a +0.9 correlation between human‑only and human+synthetic product tests, with synthetic data making subgroup differences significant in 60% of cases. Analysts forecast the synthetic‑data market to grow from $0.4bn (2025) to $4.4bn (2035). (Qualtrics; Research Live; Ipsos infographic; Future Market Insights)

Synthetic lets you explore more ideas, faster, before you put hard cash behind media or production.

Where synthetic personas make the biggest difference on social

Test it before you post it

Run your hooks past three personas and ask, plainly: “would you save this?” “would you send it to a colleague?” If the answer’s meh, fix it before the feed sees it. Use them to spot words or visuals that could grate or offend, and nudge your idea toward something people want to pass on.

Format and message fit by platform

- LinkedIn: stress‑test an exec post with a CFO‑sceptical persona. Tighten the proof points. Then give it a tiny paid nudge to check reality.

- Instagram/Reels: try three hooks and two caption styles. Ask which one triggers a save.

- TikTok: simulate likely stitches/duets and the first wave of comments. Prep your rebuttals before you hit publish.

Rapid iteration without real budgets

Spin 10–20 variants super fast. Keep the best two and bin the rest. Then run a tiny paid split to check the signal.

Audience targeting refinement

Use synthetic personas to turn the data you already have (social analytics, CRM, reviews) into practical targeting moves: which segments to prioritise, what interests/keywords to include or exclude, and where to spend (platforms and placements).

Cross‑market and multicultural checks

Check tone, imagery and claims across cultures and languages before you localise. It’s cheaper to fix a line here than to apologise later.

Turn research into live conversations

This is research you can talk to. Before you post, sit a persona down, run a quick mock focus group, and ask it to push back. It helps you find language people want to save or send, flags tripwires that could spark a pile‑on, and gets you to stronger options fast, without burning budget.

Practical plays:

- Objection hunt: “Tell me why this post would annoy you.”

- Language polish: “Rewrite the CTA for a time‑poor CFO.”

- Edge scan: “Which phrases risk negativity in the comments?”

- Proof tune: “Which proof point would make you trust this more?”

Opposing views to keep us sharp

- Pro: Better research hygiene. You get reasoned answers in minutes.

- Con: Without grounding, you’re chatting to a clever mirror. Build on first‑party data.

A solid benchmark, still needs a sense‑check

The best‑evidenced setups (interview‑seeded agents) match human survey answers with ~85% “as‑good‑as‑self” accuracy. (Stanford HAI) Evidenza reports ~95% alignment in brand‑survey comparisons. Synthetic Users reports 85–92% parity between synthetic and organic interviews.

How to use that in social:

- Treat synthetic chats as a first pass and supporting evidence, not the final truth.

- Always run a small human validation (e.g., low‑spend post boost or creator cell) before full roll‑out.

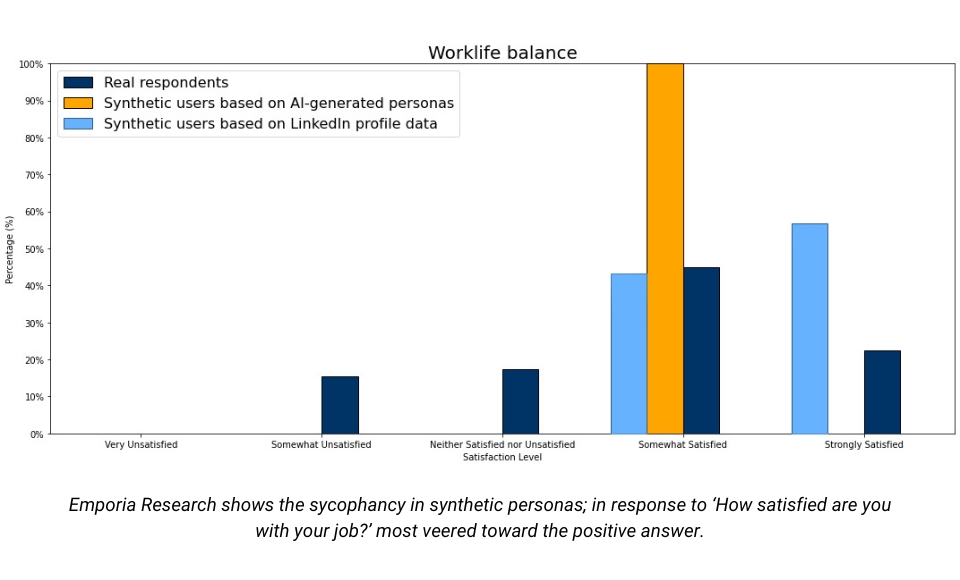

Methods that work (so you don’t get AI‑sycophancy)

Ground your personas with retrieval‑augmented generation (RAG) using your own evidence (VoC, reviews, call notes, social comments, past posts and performance).

In practice, you point the model at an indexed library (a “vector” database). When you ask the persona a question, it first retrieves the most relevant snippets and then answers with those facts in view, ideally citing them too. That keeps answers faithful to your data, reduces hallucinations, and lets the persona reflect what customers actually say right now.

Start with a small, high‑quality corpus (your top FAQs, strongest comments and transcripts), expand monthly, and make sure permissions are clear for anything containing personal data. (Google Cloud on RAG)
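To make the retrieve‑then‑answer step concrete, here is a minimal sketch in Python. It is an illustration under stated assumptions, not a production setup: the three corpus entries, the persona wording and the function names are all hypothetical, and plain word‑overlap (cosine similarity on word counts) stands in for the embeddings a real vector database would use.

```python
import math
import re
from collections import Counter

# Hypothetical mini corpus. In practice: your own FAQs, comments and transcripts.
CORPUS = [
    {"id": "review-1", "text": "The pricing page is confusing and I could not find the annual plan."},
    {"id": "call-7", "text": "Our CFO wants proof of payback within two quarters before signing."},
    {"id": "comment-3", "text": "Love the templates, they save me hours every week."},
]


def tokenize(text):
    """Lowercase the text and split it into word tokens."""
    return re.findall(r"[a-z0-9]+", text.lower())


def cosine(a, b):
    """Cosine similarity between two word-count vectors (Counters)."""
    dot = sum(count * b[token] for token, count in a.items())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def retrieve(question, corpus, k=2):
    """Return up to k snippets most similar to the question, dropping zero matches."""
    query = Counter(tokenize(question))
    scored = [(cosine(query, Counter(tokenize(doc["text"]))), doc) for doc in corpus]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [doc for score, doc in scored[:k] if score > 0]


def build_prompt(persona, question, snippets):
    """Put the retrieved evidence in front of the question and ask for citations."""
    evidence = "\n".join(f"[{s['id']}] {s['text']}" for s in snippets)
    return (
        f"You are {persona}. Answer using only the evidence below "
        f"and cite the snippet ids you relied on.\n\n"
        f"Evidence:\n{evidence}\n\nQuestion: {question}"
    )


question = "What proof does a CFO need?"
prompt = build_prompt("a sceptical CFO", question, retrieve(question, CORPUS))
print(prompt)
```

The prompt that comes out is what you would hand to whichever model runs your persona. Because each snippet carries an id, you can trace any answer back to the comment or call note it leaned on.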

Set-up tips

- Index the right stuff, not everything. Start with FAQs, top-performing posts, high-signal comments, CS notes and sales call transcripts. Put them in a vector store. Tell the agent to cite when unsure so you can see where an answer came from.

- Bake in dissent. Add a standing prompt like: “Now argue against this like a sceptical CFO/engineer/parent.” Rotate personas with different stances. It reduces AI sycophancy and surfaces risks early.

- Keep a human hold-out. Take the top two ideas and run a tiny paid split or 5–10 quick interviews. If humans disagree with the persona, go with the humans and update the corpus.

You can read more on the basics of set‑up in this article.

Biases and other catches (read this bit)

- Virtue bias (over‑good answers): NIQ found synthetic respondents overweighted health concerns versus real people. It’s the classic “tell you what sounds right” effect. Anchor to real signals (click‑through, saves, purchases, call notes) and force trade‑offs (“taste vs health”, “price vs convenience”) so answers mimic real choices. (NIQ)

- Prompt sensitivity & false certainty: Small wording changes can swing outputs, and default settings often produce neat, overly confident clusters. Run variation (multiple prompts, seeds and temperatures), inject dissent (“argue against this”), and ask for uncertainty (“what would change your mind?”) to expose spread, not just a tidy mean.

- Garbage‑in, garbage‑out: Personas mirror the data you feed them. If inputs are generic or stale, outputs will be beige. Start with fresh first‑party signals, diversify sources (reviews, comments, CRM, support tickets), and time‑stamp your corpus so you keep it current.
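One way to expose spread rather than a tidy mean is to rerun the same question with varied prompts, seeds and temperatures, then look at how much the ratings move. The sketch below assumes you have already collected those ratings. The scores, the 1 to 10 scale and the `max_spread` threshold are all made up for illustration, so tune them to your own panel.

```python
from statistics import mean, pstdev


def summarise_runs(scores, max_spread=1.5):
    """Summarise repeated persona ratings and flag results that swing too much.

    scores: ratings for one idea from several runs (different prompts, seeds, temperatures).
    max_spread: hypothetical cut-off on the standard deviation; not a recognised standard.
    """
    spread = pstdev(scores)
    return {
        "mean": round(mean(scores), 2),
        "spread": round(spread, 2),
        "min": min(scores),
        "max": max(scores),
        "stable": spread <= max_spread,
    }


# Made-up "would you save this?" ratings (1-10) from six runs per hook.
runs = {
    "hook_a": [7, 8, 7, 8, 7, 8],
    "hook_b": [9, 3, 8, 2, 9, 4],
}

for hook, scores in runs.items():
    print(hook, summarise_runs(scores))
```

In this example hook_b has the single highest ratings, but it swings so widely that you would not trust it without a human check. hook_a is less exciting and far more consistent.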

Opposing views

- Pro: Bias can be measured and corrected with reweighting, post‑stratification, diversity checks and dissent prompts, so synthetic becomes a reliable first pass.

- Con: Even with controls, edge cases and minority voices can be under‑represented. Treat synthetic as directional until a human or field data says it holds.

Synthetic personas don’t replace your audience. They bring your research to life in the messy middle, so you arrive at the real world with sharper ideas, fewer blind spots and better odds. Treat them as rehearsal, and your social will feel smarter, kinder and more effective where it counts, in the feed.

Want to try this with your next launch?

Reply to this email or book a quick chat and we’ll set up a grounded synthetic panel for your top segments.

FAQs on synthetic personas

What are synthetic personas for social?

Synthetic personas are interviewable, AI-assisted stand-ins for your real audience segments. They’re built from your first-party data and social listening so they reflect what people actually say and do. You can pressure-test hooks, visuals and claims before the feed sees them, surface likely objections and cultural tripwires, and rank the ideas worth backing.

At social media agency Immediate Future, we use them as a fast, low-waste rehearsal layer, then sense-check the winners with a small human test. Net result: higher relevance, fewer flops, smarter spend. Serious Social for Growth.

How do I get started with synthetic personas?

Pick one priority segment. Ground a persona in your signals (CRM, analytics, reviews, transcripts) and fresh social listening. Run a simple flow: test 10 hooks × 3 visuals × 2 CTAs; ask for objections and first-wave comments; choose the best two; validate with a tiny paid split.
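For a sense of scale, 10 hooks × 3 visuals × 2 CTAs is 60 combinations. A rough sketch of how you might lay out that grid and keep the best two follows. The variant names are placeholders, and `placeholder_score` is a stand‑in for whatever rating your persona panel returns, not a real scoring method.

```python
from itertools import product

# Placeholder names; swap in your real hooks, visuals and CTAs.
hooks = [f"hook_{i}" for i in range(1, 11)]
visuals = ["visual_a", "visual_b", "visual_c"]
ctas = ["cta_soft", "cta_direct"]

# Every hook paired with every visual and every CTA: 10 x 3 x 2 = 60 variants.
variants = [
    {"hook": h, "visual": v, "cta": c}
    for h, v, c in product(hooks, visuals, ctas)
]


def placeholder_score(variant):
    """Stand-in only: replace with the rating your persona panel gives this variant."""
    return 1.0 if variant["cta"] == "cta_direct" else 0.0


def shortlist(variants, score_fn, n=2):
    """Return the n highest-scoring variants."""
    return sorted(variants, key=score_fn, reverse=True)[:n]


best_two = shortlist(variants, placeholder_score)
print(len(variants), best_two)
```

The two that survive are the ones you would take into the tiny paid split.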

Keep your source library small and high quality, refresh monthly, and log prompts and sources for audit. Immediate Future, a social media agency, can set this up fast and fold the learnings back into your content playbook so each round gets sharper.

What are the risks and how do I manage bias?

Expect positivity bias and prompt sensitivity. Personas can give “sounds good” answers and cluster too neatly. Fix that by anchoring to behaviour (saves, sends, clicks, purchases), forcing trade-offs (price vs convenience), adding dissent prompts (“argue like a sceptical CFO”), and keeping a human hold-out to verify the winners. Keep your corpus current and diverse.

At social media agency Immediate Future, we combine these guardrails with a quick real-world check so synthetic insights guide, not mislead. It’s a first pass.

How should I measure success on social with synthetics in the loop?

Judge on signals that matter to pipeline and trust: saves, sends, dwell, and branded search lift. Use a small, matched-audience split to confirm that synthetic rankings predict real engagement.
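One simple way to check whether synthetic rankings predict real engagement is a rank correlation between the persona scores and what the matched split delivered. The sketch below uses Spearman’s rank correlation, which is our choice for illustration rather than a prescribed method, and the five posts’ scores and save rates are invented.

```python
from math import sqrt
from statistics import mean


def ranks(values):
    """Rank values from 1 (smallest) upwards, giving tied values their average rank."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    result = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        average_rank = (i + j) / 2 + 1
        for k in range(i, j + 1):
            result[order[k]] = average_rank
        i = j + 1
    return result


def spearman(x, y):
    """Spearman rank correlation: Pearson correlation computed on the ranks."""
    rx, ry = ranks(x), ranks(y)
    mx, my = mean(rx), mean(ry)
    cov = sum((a - mx) * (b - my) for a, b in zip(rx, ry))
    sx = sqrt(sum((a - mx) ** 2 for a in rx))
    sy = sqrt(sum((b - my) ** 2 for b in ry))
    return cov / (sx * sy) if sx and sy else 0.0


# Invented example: persona scores vs observed save rate for the same five posts.
synthetic_scores = [8.1, 6.4, 7.2, 5.0, 9.0]
real_save_rates = [0.031, 0.012, 0.015, 0.025, 0.040]

rho = spearman(synthetic_scores, real_save_rates)
print(round(rho, 2))  # 0.7 for this made-up data
```

A value near 1 means the persona ordered the posts much as real people did. A value near 0 means the ranking told you little. With only a handful of posts, treat the number as directional.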

Where reality differs, capture the gap, update the persona’s sources, and rerun. Over time you should see fewer misses, faster learning cycles and tighter creative. Social media agency Immediate Future tracks these intent metrics and ties them to outcomes, so your synthetic layer drives practical improvements.